8/22/2024

Understanding the Language Models Behind ChatGPT

ChatGPT has been creating quite a buzz since its debut. Produced by OpenAI, this chatbot is built on language models that enable it to generate human-like responses in a conversation. To get a grip on how this all works, let's dive into the techy side of things, including the central role of Large Language Models (LLMs). This post will take you through the world of language models, focusing on how they form the backbone of ChatGPT.

What Are Large Language Models?

Large Language Models (LLMs) are neural networks trained to understand & generate text based on patterns learned from enormous datasets. Unlike traditional programs, which need explicit instructions for each task, LLMs work by prediction: given the preceding text, they predict the next token (a word or a fragment of a word).
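To make "predict the next word" concrete, here is a toy sketch. It is not how ChatGPT works internally: it simply counts which word follows which in a tiny made-up corpus, whereas an LLM learns far richer patterns with a neural network. The function names and the corpus are invented for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count, for every word, which words follow it in the corpus."""
    words = corpus.lower().split()
    followers = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        followers[prev][nxt] += 1
    return followers

def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if the word is unseen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Made-up corpus, for illustration only
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept"
model = train_bigram(corpus)

# "cat" follows "the" three times, more often than "mat" or "fish"
print(predict_next(model, "the"))
```

The idea carries over to real models: look at what came before, then pick a likely continuation. The difference is that an LLM conditions on the whole preceding context rather than a single word.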

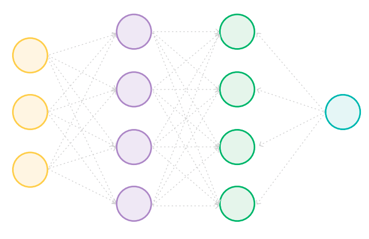

The development of LLMs hinges on a neural network architecture known as the transformer. Introduced by Google researchers in the 2017 paper “Attention Is All You Need,” transformers have captured significant attention due to their ability to efficiently process sequences of data (like text) using a mechanism known as self-attention. This mechanism allows the model to weigh the significance of each part of an input sequence relative to the others, making it adept at understanding context.

Transformer Architecture Explained

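The transformer architecture consists of a stack of interconnected layers that help a model learn the nuances of human language. The original design has two essential components: the encoder & the decoder.

- Encoder: Processes input data (text) & creates an internal representation that captures the meaning of the words and their relationships.

- Decoder: Predicts the next word in a sequence, one step at a time, generating text that sounds coherent & human-like. In the original design it also draws on the encoder’s output.

The GPT models behind ChatGPT use a decoder-only variant: there is no separate encoder, and the model reads the prompt and generates the reply with the same stack of decoder layers.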

Self-Attention Mechanism

One of the key innovations of the transformer model is the self-attention mechanism. It lets the model look at the other words in the input sequence and score how relevant each one is to the word it is currently processing. For example, in a sentence like, “The cat sat on the mat because it was comfortable,” the word 'it' is ambiguous on its own; self-attention lets the model weigh candidates such as 'the mat' and 'the cat' when working out what 'it' refers to. This capability improves the understanding of context significantly, which is crucial for generating human-like text responses.
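Here is a minimal sketch of the idea in plain Python, assuming the simplest possible setup: each word's vector serves as its own query, key, and value. Real transformers apply learned projection matrices and use many attention heads, so treat this as an illustration of scaled dot-product attention only. The 2-dimensional embeddings are hand-picked (hypothetical) so that 'it' lies closer to 'mat' than to 'cat'.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values = vectors."""
    d = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:
        # Similarity of this word to every word in the sequence, itself included
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        # New representation: weighted average of all word vectors
        out = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical embeddings, chosen by hand for illustration only
tokens = ["cat", "mat", "it"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [0.2, 0.9]]

_, weights = self_attention(embeddings)
for tok, w in zip(tokens, weights[2]):
    print(f"'it' attends to {tok!r}: {w:.2f}")
```

With these made-up vectors, 'it' puts more weight on 'mat' than on 'cat'. In a trained model, those weights come from parameters learned during training rather than from hand-picked numbers.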

The Training Process: A Roller Coaster Ride

Training the models behind ChatGPT isn’t a walk in the park. Here are the main stages involved in getting ChatGPT prepped & ready for action:

1. Generative Pre-Training

In this initial stage, the model is fed vast amounts of text from diverse sources (books, websites, articles, and more) without any task-specific labels. Its only job is to predict what comes next in the text. Think of it as an intensive reading course, where the model learns the patterns and structures of the language.
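A rough sketch of what "learning from text" means numerically: at each position the model assigns probabilities to possible next tokens, and training nudges it to put more probability on the token that actually came next. The standard measure is the average negative log-likelihood (cross-entropy). The probabilities below are made up for illustration; a real model produces them over a vocabulary of tens of thousands of tokens.

```python
import math

def next_token_loss(predicted_probs, target_tokens):
    """Average negative log-likelihood of the true next tokens."""
    losses = [
        -math.log(probs[target])
        for probs, target in zip(predicted_probs, target_tokens)
    ]
    return sum(losses) / len(losses)

# Hypothetical model outputs: one probability table per position
predicted = [
    {"cat": 0.7, "dog": 0.2, "mat": 0.1},
    {"sat": 0.5, "ran": 0.4, "ate": 0.1},
]
targets = ["cat", "sat"]  # the tokens that actually came next

loss = next_token_loss(predicted, targets)
print(round(loss, 3))
```

The lower the loss, the more probability the model placed on the right tokens. Pre-training is essentially this calculation repeated over an enormous amount of text, with the model's parameters adjusted to push the number down.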

2. Supervised Fine-Tuning (SFT)

After acquiring a general understanding of language during the pre-training phase, the model undergoes fine-tuning on labeled datasets: prompts paired with example responses written by people. This stage optimizes the model to produce more relevant responses in specific scenarios.
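As a sketch of what a labeled fine-tuning example can look like, the snippet below joins a prompt and its target response and marks which tokens count toward the training loss. Scoring only the response tokens is a common practice in open-source fine-tuning setups; whether OpenAI does exactly this is an assumption here, and the helper name and whitespace "tokens" are invented for illustration.

```python
def build_sft_example(prompt_tokens, response_tokens):
    """Join prompt and response; flag only the response tokens for the loss."""
    tokens = prompt_tokens + response_tokens
    # 0 = ignore when computing the loss (prompt), 1 = train on this token (response)
    loss_mask = [0] * len(prompt_tokens) + [1] * len(response_tokens)
    return tokens, loss_mask

# Hypothetical example pair; real systems use subword tokens, not whole words
tokens, mask = build_sft_example(
    ["What", "is", "the", "capital", "of", "France", "?"],
    ["The", "capital", "is", "Paris", "."],
)

for tok, m in zip(tokens, mask):
    print(f"{tok:10s} {'train' if m else 'skip'}")
```

The model still reads the whole sequence, but it is only graded on how well it reproduces the response part.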

3. Reinforcement Learning from Human Feedback (RLHF)

This advanced step involves human raters who compare and rank responses generated by the model. Their rankings are used to train a reward model that scores responses, and the chat model is then tuned with reinforcement learning to produce responses that score highly. Here is where the real magic happens: the model learns which answers people actually prefer!
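One piece of this pipeline is easy to sketch: turning "raters preferred response A over response B" into a training signal for a reward model. A common formulation in published RLHF work is a pairwise loss, `-log(sigmoid(r_chosen - r_rejected))`. Treat the snippet as an illustration under that assumption rather than a description of ChatGPT's actual training code; the reward values are made up.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss: -log(sigmoid(r_chosen - r_rejected)).

    Small when the reward model scores the human-preferred response higher,
    large when it scores the rejected response higher.
    """
    diff = reward_chosen - reward_rejected
    # -log(sigmoid(x)) is equal to log(1 + exp(-x))
    return math.log1p(math.exp(-diff))

# Hypothetical reward scores for two responses to the same prompt
agrees_with_raters = preference_loss(reward_chosen=2.0, reward_rejected=0.0)
disagrees_with_raters = preference_loss(reward_chosen=0.0, reward_rejected=2.0)

print(f"reward model agrees with raters:    {agrees_with_raters:.3f}")
print(f"reward model disagrees with raters: {disagrees_with_raters:.3f}")
```

Minimizing this loss pushes the reward model to agree with human rankings. The chat model is then optimized against that reward model in a separate reinforcement learning step, which is not shown here.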

ChatGPT's Training Data

It's essential to mention that the training data comprises millions of text examples from multiple genres, which helps ensure diverse conversational skills. The model does not look answers up in memory like a database; it generates each response piece by piece, using the language patterns it learned during training.
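To show the difference between "looking up" and "generating," here is a small sketch of how a next token can be drawn from a model's scores. The scores (logits) and the three-word vocabulary are invented, and temperature sampling is just one common decoding strategy, not necessarily the exact one ChatGPT uses.

```python
import math
import random

def sample_next_token(logits, temperature=1.0, rng=random):
    """Sample one token from softmax(logits / temperature)."""
    tokens = list(logits)
    scaled = [logits[t] / temperature for t in tokens]
    m = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scaled]
    # random.choices normalizes the weights internally
    return rng.choices(tokens, weights=weights, k=1)[0]

# Hypothetical scores for the word after "The cat sat on the"
logits = {"mat": 2.0, "sofa": 1.5, "moon": -1.0}

rng = random.Random(0)  # seeded so repeated runs give the same draws
print([sample_next_token(logits, temperature=0.7, rng=rng) for _ in range(5)])
```

A lower temperature makes the most likely token win almost every time, while a higher one lets less likely tokens through more often. This is also why the same prompt can yield different answers on different runs.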

Limitations and Challenges of LLMs

While LLMs have shown great promise, understanding their limitations is equally vital. Even top-notch models like ChatGPT can produce incorrect or nonsensical answers. They sometimes rely on outdated knowledge, because they only know what was in their training data, and they can “hallucinate” facts, meaning they produce information that sounds accurate but is simply not true. This is why human oversight remains necessary.

Customizing Your ChatGPT Experience with Arsturn

One exciting development in the world of ChatGPT is the ability to create custom chatbots that leverage the same technology! Arsturn empowers users & businesses to take ChatGPT into their own hands, easily building tailored chatbots to fit their unique branding & objectives.

Why Arsturn?

- Instant Customization: With Arsturn, you don’t need coding skills to create personalized AI chatbots built on ChatGPT technology.

- Adaptable: Whether you’re an influencer, a local business, or a brand owner, an Arsturn chatbot adapts to your specific needs, helping you handle FAQs, promotions, or even fan interactions.

- Insightful Analytics: Make informed decisions with deep insights about audience engagement & interests.

Future Prospects

The future for LLMs and platforms like ChatGPT looks promising. As technology advances, we’re likely to see models that can engage more deeply with humans, perhaps facilitating healthcare workflows, enhancing creative writing, & revolutionizing customer service interactions. Moreover, easily accessible interfaces like Arsturn will empower even those without tech expertise to leverage intelligence in customer interactions, making the benefits of AI widely available.

Conclusion

Understanding the language models behind ChatGPT reveals not just the intricacies of artificial intelligence but also the vast potential in revolutionizing how we communicate with machines. As LLM technology evolves, the implications for user experience, engagement, & interaction are significant. Opportunities abound, shaping the way businesses connect with audiences in today’s digital age. Why not give it a try? Create your customized chatbot today with Arsturn & unlock the potential of conversational AI for your brand!