8/27/2024

Setting Up Ollama with Microsoft PowerApps

In today’s rapidly evolving digital world, combining AI with productivity tools is increasingly valuable. Microsoft PowerApps and Ollama are good examples of tools that complement each other. This guide walks you through setting up Ollama with Microsoft PowerApps step by step, so you can add LLM-backed data processing to your applications!

What is Ollama?

Ollama is a tool that lets you run large language models (LLMs) locally. It's available for macOS, Linux, and Windows (natively, or through the Windows Subsystem for Linux, WSL). This means model inference runs on your own machine, with no need to rely on a cloud AI service! You can check out the full details on the Ollama official site!

Ollama works by pulling your desired models locally and serving them through a simple REST API (by default on port 11434). You can use Ollama models for a variety of tasks, including text generation, data analysis, and much more, without the per-request fees that come with cloud-based options.
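To make the REST API concrete, here is a minimal Python sketch of a client, using only the standard library. It assumes a server at Ollama's default address (`http://localhost:11434`) and a model named `llama2`; substitute any model you have pulled. The function names `build_generate_request` and `generate` are made up for this illustration.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default local address


def build_generate_request(prompt, model="llama2", base_url=OLLAMA_URL):
    """Build (but do not send) a POST request for the /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/api/generate",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama2", base_url=OLLAMA_URL, timeout=120):
    """Send the prompt and return the generated text from the JSON reply."""
    request = build_generate_request(prompt, model, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        return json.loads(reply.read())["response"]
```

Once a server is running (Step 1 below covers that), calling `generate("Why is the sky blue?")` should return the model's answer as a plain string. Splitting the request construction from the network call keeps the first half easy to inspect without a server.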

Why Use PowerApps?

Microsoft PowerApps allows organizations to quickly build custom applications tailored to specific needs. With PowerApps, you can easily connect to various data sources, automate processes, and build applications that integrate seamlessly into the Microsoft ecosystem. The integration of Ollama into PowerApps enhances its capabilities, letting you use advanced AI-driven features like Natural Language Processing, data querying, and automated responses.

Benefits of Combining Ollama with PowerApps

Combining Ollama with Microsoft PowerApps offers several substantial advantages:

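- Cost-Efficiency: Run AI tasks on hardware you already own instead of paying per-request fees for a cloud AI service.

- Privacy: The model runs on your local machine, so prompts are not handed to a third-party AI provider. Keep in mind that requests from PowerApps still reach your machine over the network, through the tunnel set up in Step 3.

- Speed: Inference happens on your own hardware, so response time depends on your machine rather than on a shared cloud service. Each request is still an HTTP call, and the hop through the tunnel adds some latency.

This setup can be ideal, especially for businesses dealing with sensitive information or those who want more control over their data processing.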

Step-by-Step Guide to Setting Up Ollama with PowerApps

Step 1: Install Ollama

First, you need to have Ollama installed on your machine. Follow these steps:

- Go to the Ollama download page and select the appropriate version for your OS.

- After downloading, install Ollama by following the instructions corresponding to your operating system.

- Once installed, open your terminal and run:

```bash
ollama serve &
```

This command starts your local Ollama server in the background (that is what the trailing `&` does) and makes the models you download accessible on port 11434.
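If you want to confirm the server actually came up, the following Python sketch is one way to check. It relies on the fact that a request to the server's root URL answers with the text "Ollama is running"; the function name `is_ollama_running` and the three-second timeout are choices made for this example.

```python
import http.client
import urllib.request


def is_ollama_running(base_url="http://localhost:11434", timeout=3):
    """Return True if an Ollama server answers at base_url, False otherwise."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as reply:
            status = reply.status
            text = reply.read().decode("utf-8", errors="replace")
    except (OSError, ValueError, http.client.HTTPException):
        # Connection refused, timeout, HTTP error status, or a malformed URL.
        return False
    return status == 200 and "Ollama is running" in text


if __name__ == "__main__":
    print("Ollama is up" if is_ollama_running() else "Ollama is not reachable")
```

The function deliberately swallows connection errors and returns a boolean, so it can be dropped into a startup script without extra error handling.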

Step 2: Download Your Desired Models

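You'll need models to serve through Ollama. Some popular options include Llama 2 and Mistral. To download them, run:

```bash
ollama run llama2
ollama run mistral
```

This will pull down the models and get them ready for use. Note that `ollama run` also opens an interactive chat session once the download finishes (type `/bye` to leave it); if you only want to download a model, use `ollama pull` instead.

Step 3: Install and Configure Dev Tunnels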

To connect PowerApps with Ollama, you can use Microsoft's Dev Tunnels tool to create a secure tunnel to your local instance. Here's how:

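- First, install the Dev Tunnels tool using Winget:

```bash
winget install devtunnel
```

- Authenticate with the Microsoft platform by running:

```bash
devtunnel user login
```

- Now, create a tunnel to Ollama’s default port (11434) with the following command:

```bash
devtunnel host -p 11434 --allow-anonymous
```

This will expose your Ollama instance externally through the tunnel. Note the public URL that the command prints, since you will need it in the next step. Because `--allow-anonymous` lets anyone who has that URL reach your Ollama server, stop the tunnel when you are not using it.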
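The URL you enter in PowerApps is the tunnel's base URL plus Ollama's `/api/generate` path. As a small Python sketch of that step, the helper below normalizes whatever you copied from the terminal (stray whitespace, trailing slash) into the full endpoint. The function name `generate_endpoint` and the example host are hypothetical; your real tunnel URL will differ.

```python
from urllib.parse import urlsplit, urlunsplit


def generate_endpoint(tunnel_url):
    """Turn a tunnel base URL into the full /api/generate URL."""
    parts = urlsplit(tunnel_url.strip())
    if parts.scheme not in ("http", "https") or not parts.netloc:
        raise ValueError(f"expected a full http(s) URL, got {tunnel_url!r}")
    # Keep scheme and host, replace any path with Ollama's generate endpoint.
    return urlunsplit((parts.scheme, parts.netloc, "/api/generate", "", ""))


if __name__ == "__main__":
    # Hypothetical placeholder host, used only to show the shape of the result.
    print(generate_endpoint("https://example-11434.devtunnels.ms/"))
```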

Step 4: Build Your PowerApp

Now that Ollama is set up and running, it’s time to integrate it with Microsoft PowerApps.

- Open the PowerApps portal and create a new app or open an existing one.

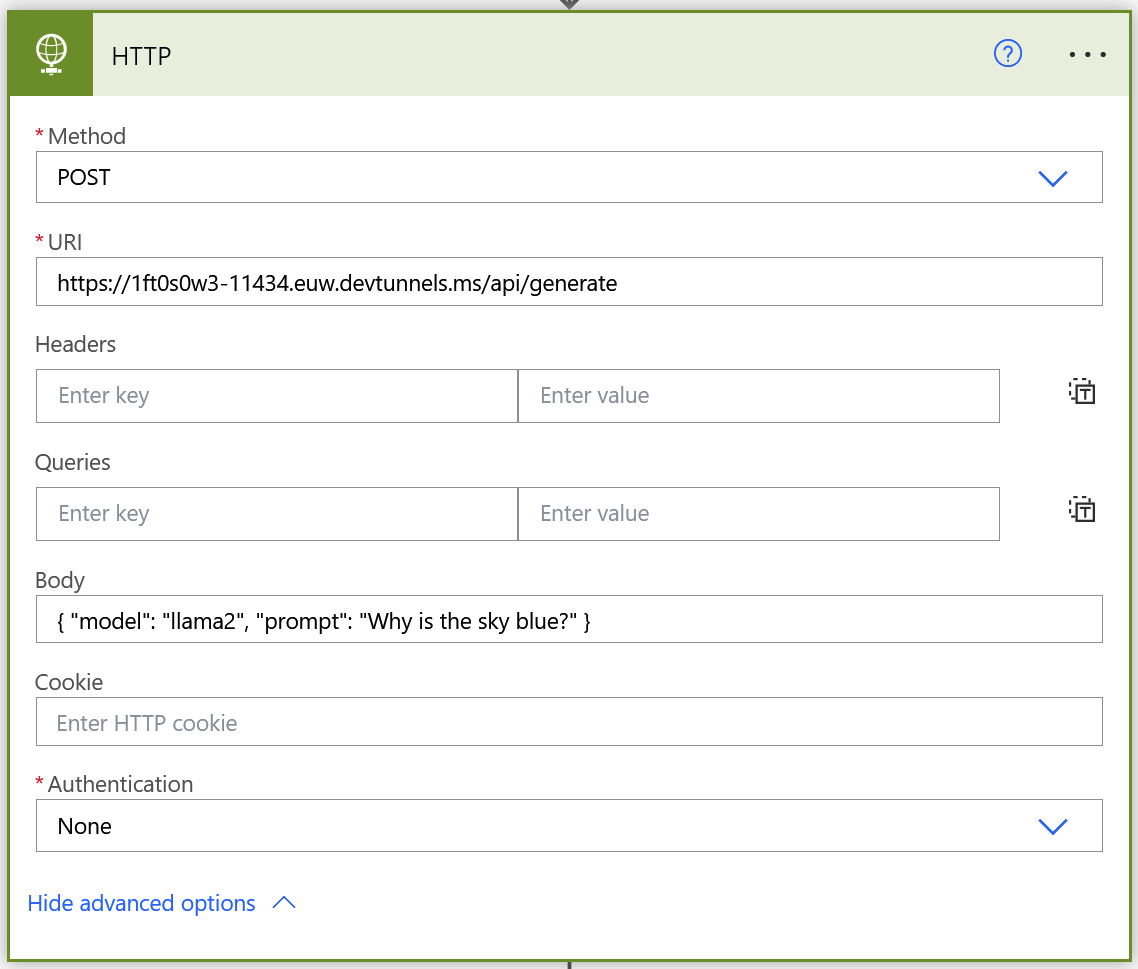

- To connect your app to Ollama, use the HTTP connector to establish the connection to your Ollama endpoint (the tunnel created in the previous step).

- Set up an HTTP action to call the URL of the Ollama server (retrieved from your Dev Tunnel). Include the `/api/generate` endpoint, which serves generation requests.

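- Next, specify the body of your HTTP request to send the data or prompts that you want Ollama to process. This would typically look like:

```json
{
  "model": "llama2",
  "prompt": "Your query goes here",
  "stream": false
}
```

The `model` value must name a model you downloaded in Step 2. Setting `stream` to `false` makes Ollama return a single JSON object instead of a stream of partial chunks, which is easier to handle in an HTTP action.

- Save your PowerApp and test the integration. You should see your Ollama model process the queries and return responses.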
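On the way back, the generated text sits in the `response` field of the JSON reply, and failures (for example an unknown model name) come back as an object with an `error` field. The Python sketch below shows one way to pull the text out. The sample reply is illustrative and abbreviated (a real one carries extra fields such as timing data), and `extract_reply` is a name invented for this example.

```python
import json

# Illustrative, abbreviated reply; not captured from a real server.
SAMPLE_REPLY = '{"model": "llama2", "response": "Hello from Ollama.", "done": true}'


def extract_reply(raw_body):
    """Return the generated text from a non-streaming /api/generate reply."""
    data = json.loads(raw_body)
    if "error" in data:
        raise RuntimeError(f"Ollama reported an error: {data['error']}")
    return data["response"]


if __name__ == "__main__":
    print(extract_reply(SAMPLE_REPLY))  # prints: Hello from Ollama.
```

In PowerApps you would do the equivalent by reading the `response` property from the parsed body of the HTTP action's result.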

Step 5: Debugging and Optimization

After integrating, you might need to tune the settings for optimal performance based on your use case. Here are some tips:

- Verify that your PowerApps HTTP connection is correctly set up and the model is responding as expected.

- Use Dev Tunnel's debugging features to diagnose any connection issues, and ensure you receive HTTP 200 OK responses to your requests.

- Optimize the model performance by selecting the appropriate model based on your computational resources (smaller models for less powerful machines).
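Some of these checks can be scripted. The Python sketch below queries Ollama's `/api/tags` endpoint (which lists installed models) and reports the HTTP status, the round-trip time, and whether the model you plan to call is present. The function names and the shape of the returned dictionary are choices made for this illustration; pass either the local address or your tunnel URL as `base_url`.

```python
import json
import time
import urllib.error
import urllib.request


def installed_models(tags_payload):
    """Extract model names from the JSON returned by GET /api/tags."""
    return [entry["name"] for entry in tags_payload.get("models", [])]


def has_model(tags_payload, wanted):
    """True if `wanted` is installed; a name without a tag means ':latest'."""
    target = wanted if ":" in wanted else f"{wanted}:latest"
    return target in installed_models(tags_payload)


def check_endpoint(base_url, model, timeout=10):
    """Report status code, latency, and model availability for an Ollama URL."""
    started = time.perf_counter()
    try:
        url = f"{base_url.rstrip('/')}/api/tags"
        with urllib.request.urlopen(url, timeout=timeout) as reply:
            status = reply.status
            payload = json.loads(reply.read())
    except urllib.error.HTTPError as err:  # server answered with an error status
        return {"ok": False, "status": err.code, "detail": str(err)}
    except (OSError, ValueError) as err:  # unreachable, timed out, or not JSON
        return {"ok": False, "status": None, "detail": str(err)}
    return {
        "ok": status == 200 and has_model(payload, model),
        "status": status,
        "latency_ms": round((time.perf_counter() - started) * 1000),
        "models": installed_models(payload),
    }


if __name__ == "__main__":
    print(check_endpoint("http://localhost:11434", "llama2"))
```

Running it once against `http://localhost:11434` and once against the tunnel URL helps tell apart a problem with Ollama itself from a problem with the tunnel.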

Additional Tips

- Ensure that your local Ollama instance remains running while testing PowerApps; otherwise, you won’t receive responses.

- Regularly check for model updates and improvements through the Ollama platform to take advantage of new features and enhancements.

Conclusion

Setting up Ollama with Microsoft PowerApps unlocks a WORLD of possibilities, enabling you to harness the power of AI in your applications efficiently. It caters to privacy concerns, saves costs, and enhances the overall functionality of your tools.

If you're looking to create conversational AI chatbots, check out Arsturn, which allows you to create custom ChatGPT chatbots to engage your audience. With its user-friendly platform and no-code integration, developing an AI chatbot has never been easier. Plus, they have great options for businesses and influencers to build meaningful connections with their audience! So, why wait? Give Arsturn a shot today!

Key Takeaways

- Safe and efficient setup of Ollama can transform your PowerApps into intelligent applications.

- Enjoy the benefits of direct processing and dynamic querying capabilities with LLMs.

- Improve your business workflow while ensuring data privacy and cost-effective solutions.

It's high time you tapped into the power of Ollama with PowerApps, as it’s a match made in tech heaven!