8/27/2024

Setting Up Ollama with Microsoft Power Automate

Introduction

Hey there! If you've been exploring the world of generative AI, you might have come across Ollama. It’s a fantastic tool for running large language models (LLMs) locally. But what if I told you that you can connect it with Microsoft Power Automate? That's right! In this blog post, we'll set up a self-hosted Ollama instance and connect it to Microsoft Power Automate using Dev Tunnels. So buckle up and let's get started!

What is Ollama?

Ollama is a powerful tool that allows you to run generative AI language models directly on your local machine. It simplifies the process of testing, developing, and running these models without needing a complex cloud setup. To give you an idea, it's like having your personal AI assistant on your desktop!

If you haven't installed Ollama yet, don’t worry. We'll go through that step-by-step.

Why Use Microsoft Power Automate?

Microsoft Power Automate (formerly known as Microsoft Flow) is part of the Power Platform and is designed to automate workflows between your favorite apps and services. By connecting it with Ollama, you can harness the LLM capabilities to generate content, respond to queries, and much more – all while automating other tasks in your workflow. It’s a PERFECT match for those looking to enhance productivity.

Setting Up Ollama

Step 1: Install Ollama

To get started, you’ll need to install Ollama on your local machine. The tool runs smoothly on Windows 11 workstations, and installation is super easy. Just head over to the Ollama installation page and follow the instructions.

Once installed, you’ll want to start the server. You can do this by running the following command in your terminal:

    ollama serve &

This command starts the Ollama server in the background. Next, you can pull the models you want to work with. For instance, to work with Llama2, you can use:

    ollama run llama2

This pulls the Llama2 model and starts it. Easy peasy, right?

Step 2: Install Dev Tunnels

Now that we have Ollama set up, it’s time to incorporate Dev Tunnels. Dev Tunnels is a tool from Microsoft that creates secure tunnels to your local applications, allowing you to connect them to the internet. This will help us expose our Ollama instance to Power Automate.

To install Dev Tunnels, you'll need to use Winget. Just run this command in your terminal:

    winget install devtunnel

Once installed, authenticate with your Microsoft account:

    devtunnel user login

Step 3: Running Dev Tunnels

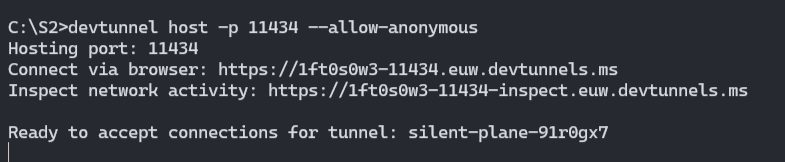

Next, you’ll need to configure Dev Tunnels to connect to your Ollama instance. Since Ollama runs on port 11434, run the following command in your terminal to open a tunnel:

    devtunnel host -p 11434 --allow-anonymous

This will provide you with two URLs: one for connecting your Ollama setup to the cloud, and the other for debugging traffic. Keep the debugging link open, as we’ll come back to it later.
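Before wiring up Power Automate, it can help to confirm that the tunnel really reaches Ollama. Here's a minimal Python sketch that asks Ollama's /api/tags endpoint which models are installed. The function names and the https://your-tunnel-url placeholder are made up for illustration; swap in the URL that devtunnel printed for you.

```python
import json
import urllib.request


def tags_url(base_url: str) -> str:
    """Build the model-listing URL for an Ollama base URL."""
    return base_url.rstrip("/") + "/api/tags"


def model_names(tags_json: str) -> list[str]:
    """Extract model names from the JSON text returned by GET /api/tags."""
    data = json.loads(tags_json)
    return [model["name"] for model in data.get("models", [])]


def list_models(base_url: str, timeout: float = 10.0) -> list[str]:
    """Ask the Ollama server behind base_url which models it has."""
    with urllib.request.urlopen(tags_url(base_url), timeout=timeout) as resp:
        return model_names(resp.read().decode("utf-8"))
```

Calling `list_models("https://your-tunnel-url")` should return a list that includes the model you pulled earlier. If the same call works against http://localhost:11434 but not against the tunnel URL, the tunnel is the likely culprit.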

Connecting Ollama with Power Automate

With your Ollama instance running and Dev Tunnels set up, it’s time to connect everything to Microsoft Power Automate.

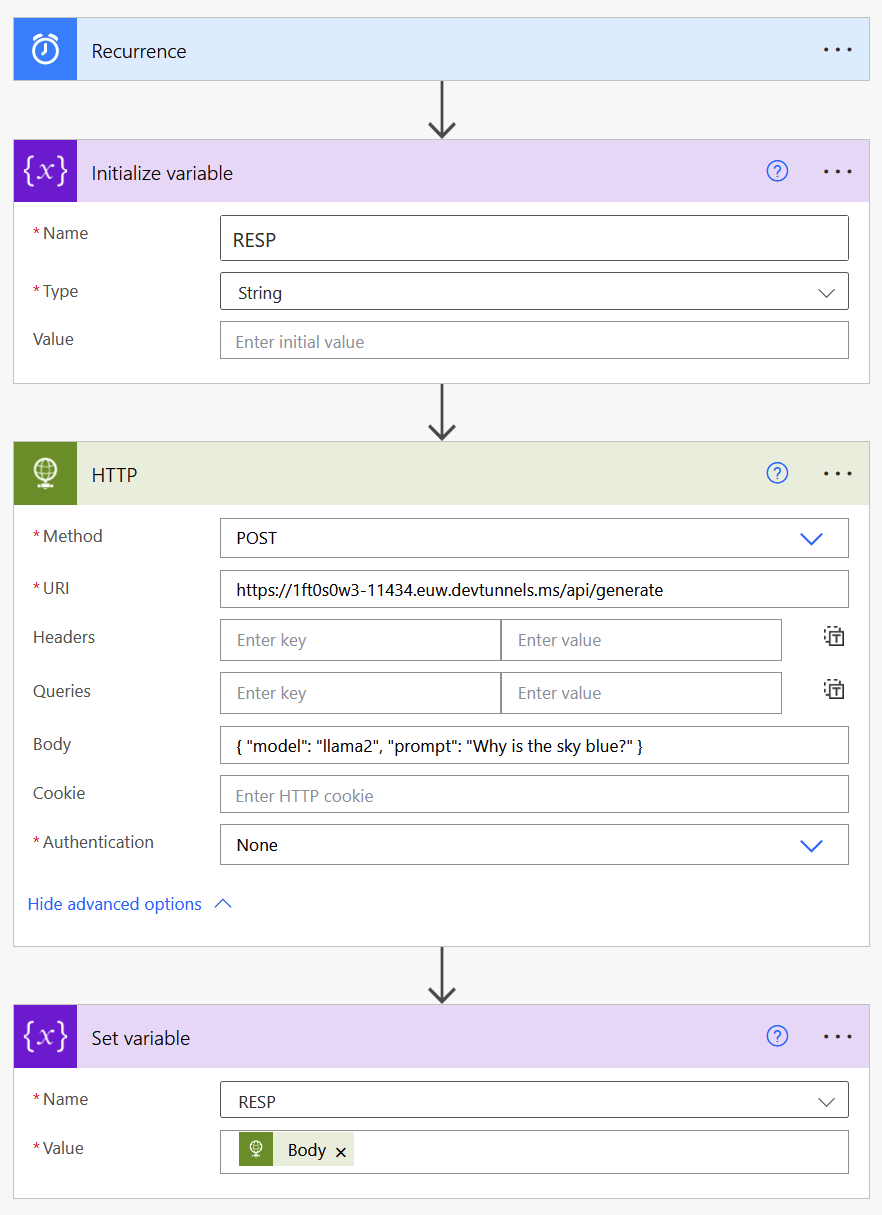

Step 1: Creating a Power Automate Flow

- Open Power Automate and create a new flow. You can start by building an instant cloud flow – this is perfect for testing connections quickly.

- Next, add an HTTP connector action to your flow. This action will allow you to send requests to your Ollama instance.

- For the HTTP action, configure it as follows:

- Method: POST

- URI: Use the Dev Tunnel URL you obtained earlier, appending /api/generate (e.g., https://your-tunnel-url/api/generate).

- Headers: Content-Type should be set to application/json.

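- In the Body section of the HTTP request, include the JSON that Ollama's /api/generate endpoint expects. The model field is required, and setting stream to false makes Ollama return one JSON object instead of a stream of chunks, which is easier to handle in a flow. For example, if you are prompting Llama2 with a specific inquiry, format it like this:

      {
        "model": "llama2",
        "prompt": "What is the weather forecast for tomorrow?",
        "stream": false
      }

- Click on Save to finalize your flow setup.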
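If you'd like to try the same request outside Power Automate first, here's a rough Python equivalent of the HTTP action configured above. It's a sketch, not the only way to do it: the function names and the tunnel URL are placeholders, and it assumes the llama2 model from earlier. Note that Ollama's /api/generate endpoint expects a model field alongside the prompt, and that stream set to false asks for a single JSON reply.

```python
import json
import urllib.request


def build_generate_request(
    base_url: str, prompt: str, model: str = "llama2"
) -> urllib.request.Request:
    """Build the same POST that the Power Automate HTTP action sends."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(
    base_url: str, prompt: str, model: str = "llama2", timeout: float = 120.0
) -> str:
    """Send the request and return the generated text."""
    request = build_generate_request(base_url, prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

For example, `generate("https://your-tunnel-url", "Say hello in one sentence.")` should come back with the model's answer as a plain string. The generous timeout is deliberate, since a local model can take a while to respond the first time it loads.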

Step 2: Capturing Ollama Output in Power Automate

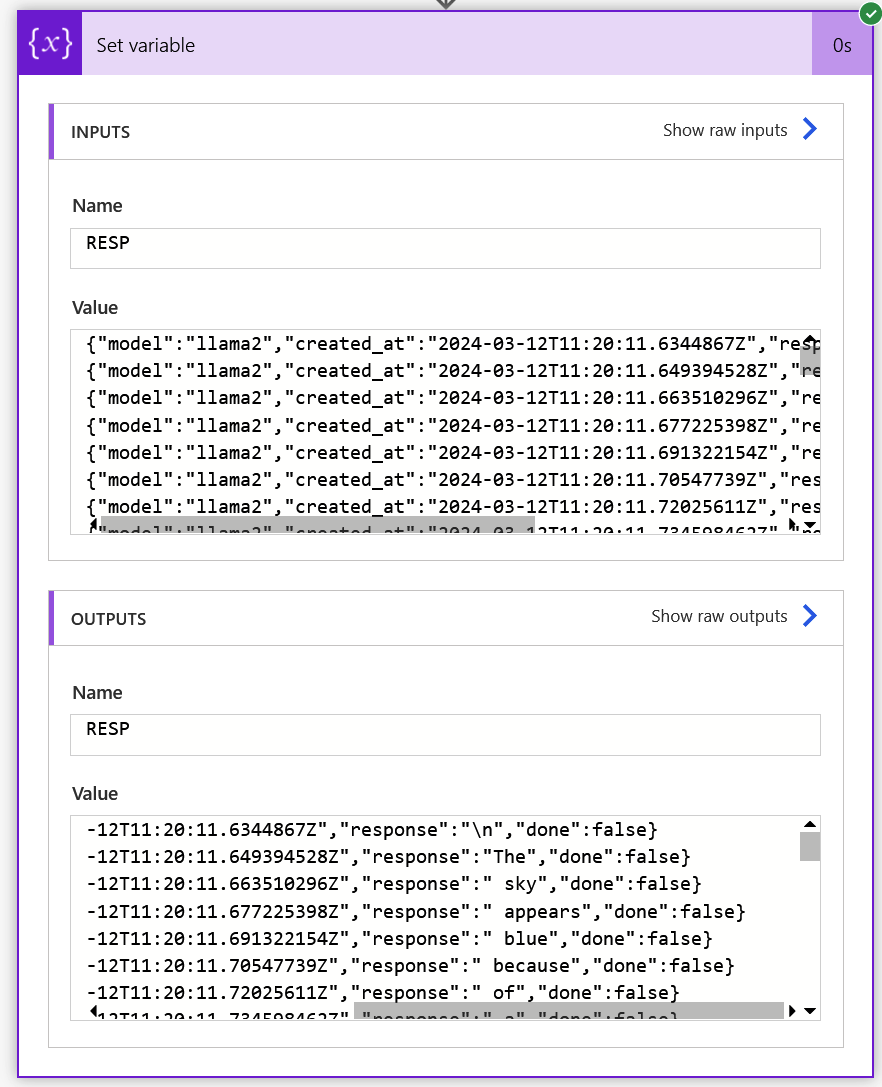

Let’s capture the output returned by Ollama in your flow. After the HTTP request action:

- Add a Compose action (or set a variable) to hold the body of the HTTP response.

- When you run this flow, it calls the Ollama model, and you should see the generated text in the outputs of that Compose action or variable.

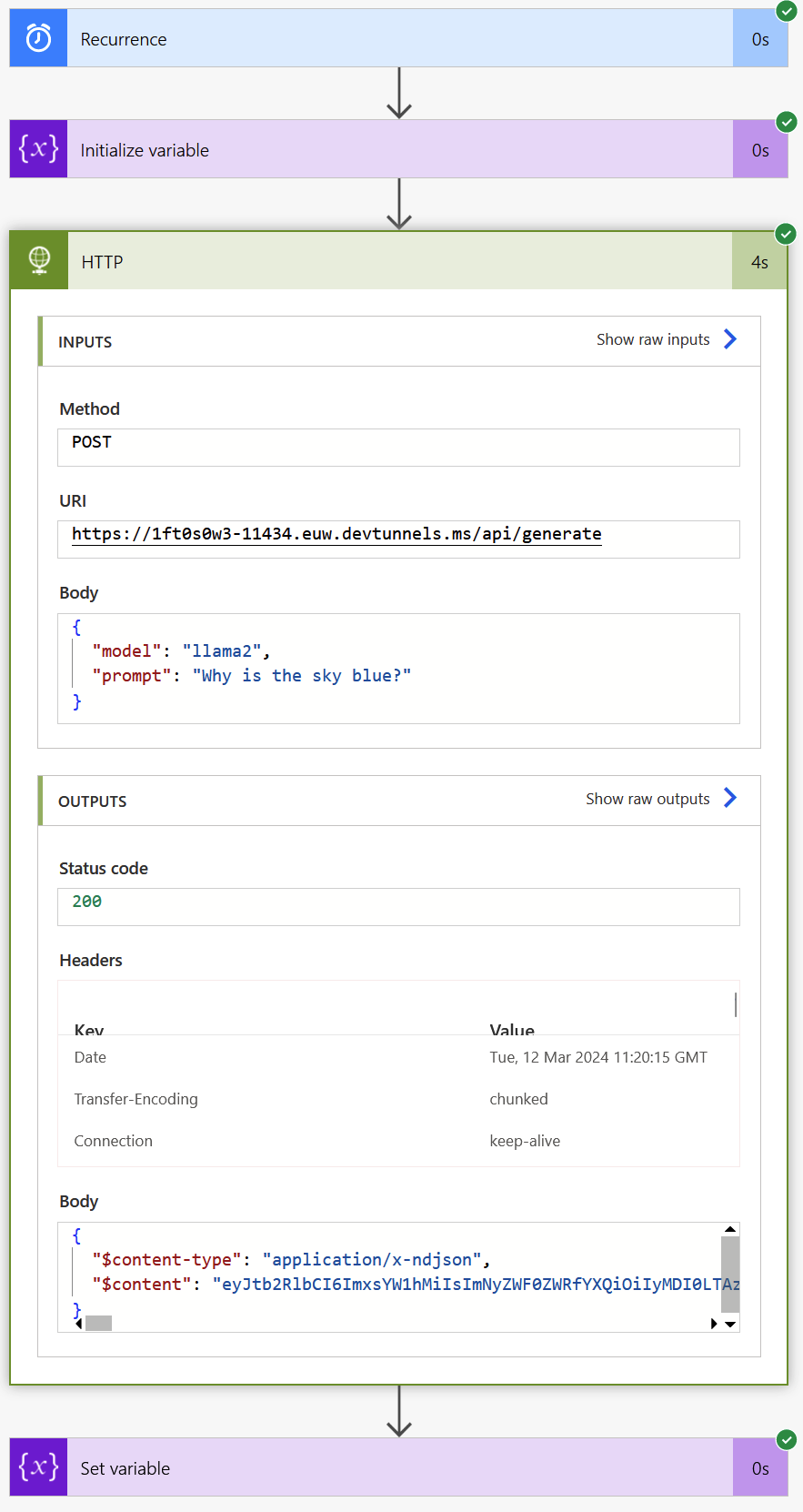
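To make the shape of that output concrete, here's a small Python sketch of how the reply from /api/generate can be unpacked. With stream set to false, Ollama returns one JSON object whose response field holds the text; with streaming left on, it returns one JSON object per line, each carrying a fragment. The function name is hypothetical. In the flow itself, an expression along the lines of `body('HTTP')?['response']` would pick out the same field, assuming your HTTP action kept its default name.

```python
import json


def extract_text(body: str) -> str:
    """Return the generated text from an /api/generate response body.

    Accepts either a single JSON object or newline-delimited JSON chunks.
    """
    try:
        chunks = [json.loads(body)]
    except json.JSONDecodeError:
        # Streaming replies hold one JSON object per line.
        chunks = [json.loads(line) for line in body.splitlines() if line.strip()]
    return "".join(chunk.get("response", "") for chunk in chunks)
```

Joining the fragments works for both cases, so the same helper covers a flow that forgot to turn streaming off.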

Testing Your Setup

With everything connected, you can now run your flow manually. Once executed, check the output of your request:

- If configured correctly, you should receive an HTTP 200 OK response indicating everything is functioning.

- You can also review the data returned from your Ollama model in the Power Automate dashboard.

Step 3: Debugging with Dev Tunnels

For debugging, you can keep reviewing the output from the debugging link provided by Dev Tunnels. That link will show you any incoming requests made to Ollama, which is particularly useful for troubleshooting issues.
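When a request never shows up on the debugging link, it's worth checking each hop separately. The sketch below is one hedged way to do that in Python: it relies on the plain-text "Ollama is running" banner that Ollama serves at its root URL, and the function names and messages are purely illustrative.

```python
import urllib.request


def probe(base_url: str, timeout: float = 10.0) -> bool:
    """Return True if base_url answers with Ollama's plain-text banner."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return "Ollama is running" in resp.read().decode("utf-8", "replace")
    except OSError:
        # Covers connection errors, timeouts, and HTTP error statuses.
        return False


def diagnose(local_ok: bool, tunnel_ok: bool) -> str:
    """Turn the two probe results into a hint about where to look."""
    if not local_ok:
        return "Ollama is not answering locally; start it with `ollama serve`."
    if not tunnel_ok:
        return "Ollama is up, but the tunnel is not forwarding to it; check `devtunnel host`."
    return "Local server and tunnel both respond; inspect the flow's HTTP action."
```

A typical call would be `diagnose(probe("http://localhost:11434"), probe("https://your-tunnel-url"))`, which narrows the problem down to Ollama, the tunnel, or the flow.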

Benefits of Using Ollama with Power Automate

- Seamless Automation: By connecting Ollama with Power Automate, you simplify repetitive tasks, streamline operations, and enhance productivity.

- Cost-Effective: Using a self-hosted instance of Ollama reduces your reliance on expensive cloud services, particularly if you are running significant AI workloads.

- Flexibility: Customizing responses through Power Automate allows for unique workflows tailored to your business needs.

Conclusion

Setting up Ollama with Microsoft Power Automate can drastically improve your automation tasks, enhance productivity, and provide a cost-effective solution for leveraging the power of large language models directly from your local machine. If you’re interested in EXPANDING your chatbot capabilities, be sure to check out Arsturn where you can instantly create custom AI chatbots for your website. This tool boosts engagement, streamlines operations, and serves as an excellent addition to your digital arsenal.

By following these simple steps, you’re now equipped to take advantage of AI automation using Ollama and Microsoft Power Automate. Let the automation journey begin!

Feel free to reach out with any questions as you dive into this exciting setup. HAPPY AUTOMATING!