8/26/2024

LlamaIndex RAG: A New Approach to Data Management

In the world of generative AI, data management plays a crucial role in maximizing the effectiveness of Large Language Models (LLMs). As the demand for smarter applications grows, so does the need for efficient ways to manage data. One pioneering approach is Retrieval Augmented Generation (RAG), particularly as utilized by LlamaIndex. This framework improves query responses by retrieving relevant data and gives the overall data management process a clearer structure. Let’s take a closer look at this innovative approach.

Understanding LlamaIndex RAG

At its core, RAG combines two critical functions: retrieval & generation. While traditional LLMs leverage vast datasets during training, they often lack access to more specific or private data from organizations. This is where LlamaIndex steps in. By harnessing RAG techniques, LlamaIndex allows users to connect their unique datasets with powerful LLMs.
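To make the two halves concrete, here is a minimal sketch of a retrieve-then-generate flow in plain Python. It is not LlamaIndex's implementation: the word-overlap scoring, the function names, and the sample passages are all assumptions chosen for illustration, and the "generation" half stops at building the prompt that would be sent to an LLM.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, passages: list[str], top_k: int = 1) -> list[str]:
    """Retrieval step: rank passages by how many query words they share."""
    query_words = tokenize(query)
    ranked = sorted(
        passages,
        key=lambda passage: len(query_words & tokenize(passage)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, context: list[str]) -> str:
    """Generation step input: retrieved context is placed ahead of the question."""
    context_block = "\n".join(f"- {c}" for c in context)
    return f"Context:\n{context_block}\n\nQuestion: {query}\nAnswer:"


# Hypothetical private data that a general-purpose LLM would not have seen.
passages = [
    "Our refund policy allows returns within 30 days of purchase.",
    "The office is closed on public holidays.",
    "Support is available by email on weekdays.",
]

question = "What is the refund policy for returns?"
top = retrieve(question, passages)
prompt = build_prompt(question, top)
print(prompt)
```

In a real deployment the word-overlap ranking would typically be replaced by embedding similarity, and the prompt would be passed to an LLM rather than printed.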

Key Use Cases of RAG in LlamaIndex

RAG applications built with LlamaIndex fall broadly into four main use cases:

- Structured Data Extraction: Using Pydantic extractors, structured data can be extracted from unstructured sources such as PDFs and websites. This supports automated workflows by filling in missing pieces of data in a type-safe manner.

- Query Engines: LlamaIndex provides query engines that handle the end-to-end flow of asking questions about data. Users pass a natural language query, the engine retrieves relevant context, and an LLM uses that context to generate a coherent, relevant answer.

- Chat Engines: Engaging conversations are possible with LlamaIndex’s chat engines. These engines support multi-turn interactions to create more dynamic and informative conversations compared to single Q&A.

- Agents: LlamaIndex facilitates the development of agents that act as intelligent decision-makers powered by LLMs, capable of dynamically choosing actions based on incoming data and queries.
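As an illustration of what "type-safe" extraction means in the first use case, the sketch below validates a JSON payload against a typed schema. It deliberately uses the standard library's dataclasses as a stand-in for Pydantic, and the JSON string stands in for output an LLM might return. The `Invoice` schema, its field names, and `parse_invoice` are hypothetical and not part of LlamaIndex.

```python
import json
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class Invoice:
    """Hypothetical target schema; the field names are illustrative only."""
    vendor: str
    total: float
    due_date: Optional[str] = None  # may be absent in the source document


def parse_invoice(raw_json: str) -> Invoice:
    """Validate a JSON payload (standing in for LLM output) against the schema."""
    data = json.loads(raw_json)

    # Reject keys the schema does not know about.
    allowed = {f.name for f in fields(Invoice)}
    unknown = set(data) - allowed
    if unknown:
        raise ValueError(f"unexpected fields: {sorted(unknown)}")

    vendor = data.get("vendor")
    if not isinstance(vendor, str):
        raise TypeError("vendor must be a string")

    total = data.get("total")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(total, bool) or not isinstance(total, (int, float)):
        raise TypeError("total must be a number")

    due_date = data.get("due_date")
    if due_date is not None and not isinstance(due_date, str):
        raise TypeError("due_date must be a string or null")

    return Invoice(vendor=vendor, total=float(total), due_date=due_date)


simulated_llm_output = '{"vendor": "Acme Corp", "total": 1250, "due_date": null}'
invoice = parse_invoice(simulated_llm_output)
print(invoice)
```

A schema library such as Pydantic performs this kind of checking declaratively. The point of the sketch is only that malformed output is rejected before it reaches downstream workflows.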

The Stages of RAG

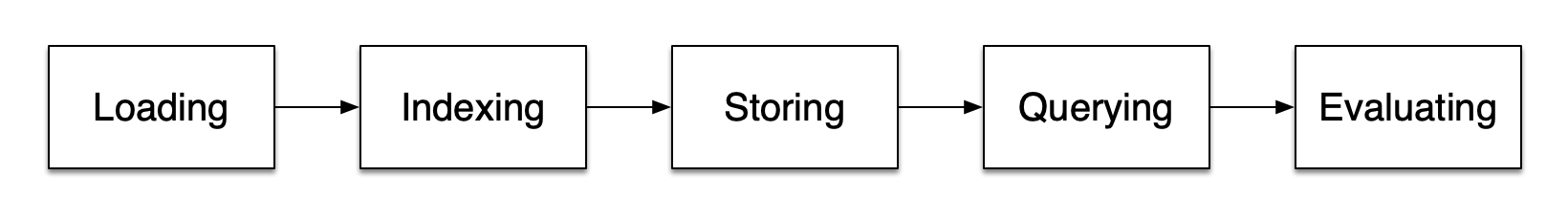

Using LlamaIndex for implementing RAG typically involves five key stages:

- Loading Data: The first step is getting the data into the system from various sources like text files, databases, APIs, or websites. Tools like LlamaHub provide hundreds of connectors to streamline this process.

- Indexing Data: Once the data is loaded, creating a structured representation of it makes retrieval efficient. This usually means generating vector embeddings, which represent the data numerically, and attaching metadata that helps target queries more precisely.

- Storing Data: After indexing, it’s important to store the indexed data along with its associated metadata. This avoids re-indexing the data for every new query.

- Querying: With the data organized, LlamaIndex offers multiple querying strategies using its versatile structures. This allows highly specific sub-queries or hybrid approaches to retrieve the right data efficiently.

- Evaluation: Finally, the retrieved answers need to be evaluated for accuracy. This critical step helps ensure that the retrieval strategies are effective, providing fast, accurate responses.
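The storing stage is the easiest one to overlook, so here is a minimal sketch of the idea: build an index once, persist it, and reload it later instead of rebuilding. This is an assumption-laden toy, not LlamaIndex's storage layer. The index is a plain dictionary, the file format is JSON, and `build_index`, `save_index`, and `load_index` are hypothetical helper names.

```python
import json
import os
import tempfile


def build_index(chunks: dict[str, str]) -> dict[str, dict]:
    """Toy indexing step: pair each chunk with simple metadata.

    A real index would also hold an embedding vector per chunk.
    """
    return {
        chunk_id: {"text": text, "metadata": {"num_words": len(text.split())}}
        for chunk_id, text in chunks.items()
    }


def save_index(index: dict[str, dict], path: str) -> None:
    """Storing step: write the index and its metadata to disk."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(index, f)


def load_index(path: str) -> dict[str, dict]:
    """Reload a previously stored index so it does not have to be rebuilt."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


# Hypothetical chunks produced by the loading stage.
chunks = {
    "faq-0": "Passwords are reset from the login page.",
    "faq-1": "Accounts lock after five failed logins.",
}
index = build_index(chunks)

with tempfile.TemporaryDirectory() as tmp_dir:
    index_path = os.path.join(tmp_dir, "index.json")
    save_index(index, index_path)
    restored = load_index(index_path)

print(restored == index)
```

The same pattern applies when the stored artifact is a set of embedding vectors: computing them is the expensive part, so they are written once and read many times.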

Key Concepts in LlamaIndex RAG

When working with LlamaIndex, there are several important concepts and terminologies to understand:

Loading Stage

- Nodes and Documents: Within the loading stage, a Document serves as a container for the data source, while a Node is a basic unit representing that data. This atomic structure allows for smooth processing and indexing of data chunks to enhance retrieval efficiency.

- Connectors: These refer to various data ingesting methods or tools (readers) that help in collecting data from different formats.
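The relationship between a source container and its chunks can be sketched with two small dataclasses. These are simplified, hypothetical stand-ins and not LlamaIndex's own classes. The word-based splitting, the `chunk_size` and `overlap` parameters, and the id scheme are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    """Container for one data source (simplified, hypothetical)."""
    doc_id: str
    text: str
    metadata: dict = field(default_factory=dict)


@dataclass
class Node:
    """One chunk of a Document, carrying a reference back to its source."""
    node_id: str
    text: str
    source_doc_id: str
    metadata: dict = field(default_factory=dict)


def split_into_nodes(doc: Document, chunk_size: int = 8, overlap: int = 2) -> list[Node]:
    """Split a document into word-based chunks that overlap by `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = doc.text.split()
    step = chunk_size - overlap
    nodes = []
    for i, start in enumerate(range(0, len(words), step)):
        chunk = words[start:start + chunk_size]
        nodes.append(
            Node(
                node_id=f"{doc.doc_id}-{i}",
                text=" ".join(chunk),
                source_doc_id=doc.doc_id,
                metadata=dict(doc.metadata),  # each node inherits a copy of the metadata
            )
        )
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return nodes


doc = Document(
    doc_id="handbook",
    text=" ".join(f"w{i}" for i in range(20)),
    metadata={"source": "handbook.txt"},
)
nodes = split_into_nodes(doc)
for node in nodes:
    print(node.node_id, "|", node.text)
```

Overlapping chunks are a common design choice because a sentence cut at a chunk boundary still appears whole in at least one neighbouring node.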

Indexing Stage

- Indexes: After ingesting data, LlamaIndex helps create indexes for easy retrieval. These indexes usually involve stored vector embeddings that capture the essence of the dataset.

- Embeddings: Embeddings are model-generated numerical representations of data. Comparing two embeddings gives a measure of how similar the underlying pieces of content are, which is what makes relevance-based retrieval possible.
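Similarity between embeddings is commonly measured with cosine similarity. The sketch below shows the calculation on a toy "embedding" made of word counts. That stand-in is an assumption for illustration only, since a real system would obtain dense vectors from an embedding model. The function names are likewise hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: a sparse word-count vector.

    A real pipeline would call an embedding model here instead.
    """
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse vectors; 0.0 if either is empty."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


query = embed("reset my password")
close = embed("how to reset a forgotten password")
far = embed("quarterly revenue grew this year")

print(round(cosine_similarity(query, close), 3))
print(round(cosine_similarity(query, far), 3))
```

The related passage scores higher than the unrelated one, which is the property a retriever relies on when it ranks chunks against a query.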

Querying Stage

- Retrievers and Routers: The retriever component is responsible for efficiently fetching relevant contexts from the index when given a query. Routers determine which retriever best suits the incoming query, ensuring that the most effective strategy is being used.

- Node Postprocessors & Response Synthesizers: A node postprocessor takes the retrieved nodes and filters, transforms, or re-ranks them. A response synthesizer then generates the final answer from the LLM using the query and the nodes that remain.
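How these querying components fit together can be sketched as a small pipeline: a router picks a retriever, a postprocessor drops weak matches, and a synthesizer assembles the answer. Everything here is a hypothetical stand-in rather than LlamaIndex's API. The retrievers return canned results, the router uses keyword rules where a real one might ask an LLM, and the synthesizer concatenates text instead of calling a model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ScoredNode:
    text: str
    score: float  # similarity between the node and the query


Retriever = Callable[[str], list[ScoredNode]]


def faq_retriever(query: str) -> list[ScoredNode]:
    """Hypothetical retriever over an FAQ index; returns canned results."""
    return [
        ScoredNode("Passwords can be reset from the login page.", 0.82),
        ScoredNode("Accounts lock after five failed logins.", 0.41),
    ]


def policy_retriever(query: str) -> list[ScoredNode]:
    """Hypothetical retriever over a policy index; returns canned results."""
    return [
        ScoredNode("Refunds are issued within 30 days of purchase.", 0.77),
        ScoredNode("Gift cards are non-refundable.", 0.35),
    ]


def route(query: str) -> Retriever:
    """Toy router: keyword rules stand in for a model-based selector."""
    if any(word in query.lower() for word in ("refund", "return", "policy")):
        return policy_retriever
    return faq_retriever


def similarity_cutoff(nodes: list[ScoredNode], cutoff: float) -> list[ScoredNode]:
    """Postprocessor: keep only nodes whose score meets the cutoff."""
    return [node for node in nodes if node.score >= cutoff]


def synthesize(query: str, nodes: list[ScoredNode]) -> str:
    """Toy synthesizer: joins the surviving context instead of calling an LLM."""
    if not nodes:
        return "No relevant context found."
    return " ".join(node.text for node in nodes)


def answer(query: str, cutoff: float = 0.5) -> str:
    retriever = route(query)
    nodes = similarity_cutoff(retriever(query), cutoff)
    return synthesize(query, nodes)


print(answer("How do I get a refund?"))
print(answer("I forgot my password"))
```

Keeping each stage as a separate function mirrors the separation described above: any one of them can be swapped out without touching the others.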

Arsturn: A Companion Innovation

To complement your LlamaIndex RAG implementation, consider leveraging Arsturn, a platform designed to create bespoke AI chatbots without any programming skills! With Arsturn, you can effectively manage interactions with your audience across various digital channels, utilizing the insights gleaned from your LlamaIndex data management strategies.

Key Benefits of Using Arsturn Include:

- No-Code Chatbot Builder: Effortlessly create your custom chatbot tailored to your brand’s needs, ensuring a seamless integration experience.

- Analytics Insights: With Arsturn, analyze conversations to understand user behavior better and refine your strategy based on insightful data.

- Customizable Engagement: Make your chatbot reflect your unique brand identity, bolstering your engagement efforts.

Imagine providing instant responses to customer queries that feed directly into your product reviews or data insights extracted using LlamaIndex RAG. The combination offers a comprehensive solution for enhancing user engagement and boosting conversions!

RAG and Traditional Data Management

The introduction of RAG through LlamaIndex represents a significant shift from traditional data management approaches. Here’s how:

- Handling of Larger Datasets: Traditional systems often struggle with vast amounts of unstructured data, relying on manual queries and processing. RAG effortlessly manages such datasets by directly indexing and querying, proving to be a step forward in terms of efficiency.

- Dynamic Data Use: Because RAG retrieves context at query time, newly indexed data can be used in responses without retraining the model, which helps keep applications responsive as the underlying data changes.

- Enhanced User Experience: While traditional platforms provide static responses based on limited sets of pre-structured content, LlamaIndex enhances this with dynamic retrieval, allowing users to engage more richly with data.

Best Practices to Optimize RAG Performance

For businesses looking to implement RAG via LlamaIndex effectively, consider the following best practices:

- Optimize Prompt Engineering: Ensure that your prompts are tailored to extract the most relevant information efficiently using LlamaIndex’s capabilities.

- Choose the Right Chunk Sizes: Properly sized data chunks can significantly enhance retrieval times and relevance, so experiment to find the optimal size.

- Leverage Structure in Retrieval: Utilize structured retrieval methods when scaling to larger datasets or handling complex retrieval tasks to achieve better outcomes.

- Continual Evaluation: Regularly evaluate your RAG system’s performance metrics to adjust and improve your overall strategy, ensuring your setup stays efficient as you grow.
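Continual evaluation needs a number to track. Two common retrieval metrics are hit rate and mean reciprocal rank (MRR), sketched below in plain Python. The chunk ids and the labelled "expected" answers are made-up sample data, and the function names are hypothetical. The same measurement could be repeated for each chunk size or prompt variant under comparison.

```python
def hit_rate(results: list[list[str]], expected: list[str]) -> float:
    """Fraction of queries whose expected chunk id appears in the retrieved list."""
    if not expected or len(results) != len(expected):
        raise ValueError("results and expected must be non-empty and the same length")
    hits = sum(1 for retrieved, gold in zip(results, expected) if gold in retrieved)
    return hits / len(expected)


def mean_reciprocal_rank(results: list[list[str]], expected: list[str]) -> float:
    """Average of 1/rank of the expected chunk; a miss contributes 0."""
    if not expected or len(results) != len(expected):
        raise ValueError("results and expected must be non-empty and the same length")
    total = 0.0
    for retrieved, gold in zip(results, expected):
        if gold in retrieved:
            total += 1.0 / (retrieved.index(gold) + 1)
    return total / len(expected)


# Made-up sample data: top-3 retrieved chunk ids for three test queries,
# and the chunk id a human labelled as the right source for each query.
retrieved_ids = [
    ["c1", "c7", "c3"],  # expected chunk ranked first
    ["c4", "c2", "c9"],  # expected chunk ranked second
    ["c5", "c6", "c8"],  # expected chunk not retrieved
]
expected_ids = ["c1", "c2", "c0"]

print(f"hit rate: {hit_rate(retrieved_ids, expected_ids):.2f}")
print(f"MRR: {mean_reciprocal_rank(retrieved_ids, expected_ids):.2f}")
```

Hit rate only asks whether the right chunk was found, while MRR also rewards ranking it near the top, so tracking both gives a fuller picture when tuning chunk sizes or retrieval settings.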

Conclusion

LlamaIndex RAG revolutionizes how we approach data management for AI applications, streamlining traditional processes and enhancing user engagement. By effectively combining rich data retrieval and generation, organizations can extract meaningful insights while maintaining responsiveness—truly the future of data management in AI applications. To fully realize this potential, consider tools like Arsturn for creating instant engagement with your audience.

Get started today, and empower your brand with an engaging AI chatbot while utilizing the amazing capabilities of LlamaIndex RAG for your data management needs!