8/26/2024

Deep Dive into DBRx and Ollama: The Current Landscape of AI Tools

The landscape of Artificial Intelligence (AI) is rapidly evolving, with new innovations rolling out at breakneck speed. Among the most notable recent developments are Databricks' DBRx, a groundbreaking language model, and Ollama, a user-friendly platform that simplifies running large language models. Both tools redefine the way enterprises and individual users can leverage AI capabilities, presenting a fascinating juxtaposition of sophisticated architecture and accessibility. In this post, we'll dive into the nuances of DBRx and Ollama, showcasing their unique features, architecture, use cases, and industry implications.

Understanding DBRx: A New Standard in AI Language Models

Databricks launched DBRx on March 27, 2024, marking a significant shift in the world of open-source AI. Designed to outperform models such as GPT-3.5, it is built on a mixture-of-experts (MoE) architecture, which lets it deliver high performance while remaining computationally efficient. Here are the key details surrounding DBRx:

The Architecture of DBRx

DBRx is a transformer-based, decoder-only large language model with 132 billion total parameters, of which 36 billion are active for any given input. Some technical specifications include:

- Pre-Training: 12 trillion tokens of carefully curated text and code data. This extensive training underpins DBRx's ability to understand queries and generate language.

- Context Length: A context window of up to 32,000 tokens, which lets it work on lengthy queries that involve holding more information at once.

- Performance Advantage: According to Databricks, DBRx inference is up to twice as fast as comparable dense models such as LLaMA2-70B, and it can produce text at up to 150 tokens per second per user when served on Databricks. This positions it as a competitive option for organizations that want fast and reliable AI responses.
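The gap between total and active parameters (36 of 132 billion, roughly 27%) comes from MoE routing: a router scores the experts for each input, and only the top few actually run. The sketch below illustrates that idea in plain Python. It is a toy under stated assumptions, not DBRx's implementation: the expert count, the value of `k`, the fixed `router_scores`, and the scalar "experts" are all hypothetical, and a real router derives its scores from the token through a learned layer.

```python
import math

def softmax(scores):
    """Turn raw router scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(router_scores)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]

def moe_forward(x, experts, router_scores, k):
    """Run only the chosen experts and blend their outputs by router weight."""
    return sum(weight * experts[i](x) for i, weight in route_top_k(router_scores, k))

# Hypothetical toy setup: 8 tiny scalar "experts", 2 active per input.
experts = [lambda x, scale=n + 1: x * scale for n in range(8)]
router_scores = [0.1, 2.0, 0.3, 1.5, -1.0, 0.0, 0.2, 0.4]

chosen = route_top_k(router_scores, k=2)
output = moe_forward(3.0, experts, router_scores, k=2)
print("active experts:", [i for i, _ in chosen])
print("blended output:", output)
```

Only two of the eight toy experts are evaluated here, which is why an MoE model's compute per token tracks its active parameters and not its total size.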

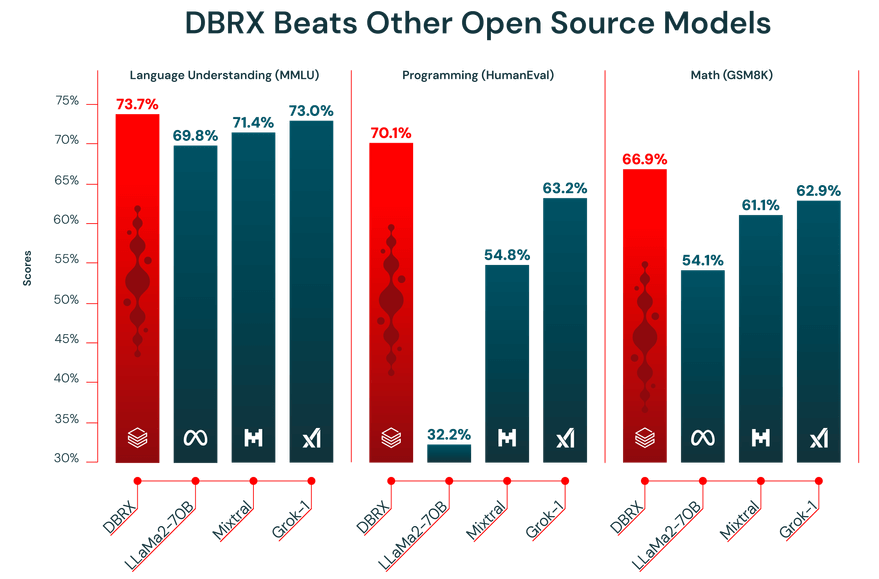

Figure 1: DBRx's performance metrics against competitors show it to be an industry leader.

Comparing DBRx Against Competitors

In tests measuring language understanding (MMLU), programming (HumanEval), and math (GSM8K), DBRx consistently surpassed its peers. For example, its performance in the MMLU benchmark reached a score of 73.7%, outperforming many of its rivals.

- MMLU Score: 73.7%

- HumanEval Score: 70.1%

- GSM8K Score: 66.9%

The incredible architecture and substantial data underpinning DBRx not only make it a benchmark in the industry but also empower enterprises to train and fine-tune their own models without relying on proprietary systems. This democratization of AI tools means organizations can tailor solutions based on their specific needs, skills, and data.

Use Cases for DBRx

DBRx’s versatility lends itself to countless applications:

- Customized AI Assistants: Companies can develop tailored virtual assistants capable of managing customer inquiries effectively while providing a unique brand voice.

- Internal Knowledge Management: Enterprises can utilize DBRx to streamline employee access to information across various systems, improving internal workflows and reducing time spent on searching for data.

- Data-Driven Decision Making: DBRx can assist in making sense of large sets of data, providing summaries or insights that would otherwise require hours of manual analysis.

Introducing Ollama: Simplifying Local LLM Operation

On the flip side of the landscape is Ollama, an open-source application designed to make it easy for users to run large language models (LLMs) on their devices. It’s aimed at both enthusiasts and professionals who want to harness the power of AI without the complexities of heavy infrastructure. Here's what Ollama has to offer:

What Exactly is Ollama?

Ollama provides a user-friendly platform for running various models locally, such as Llama 3.1, Mistral, and Gemma 2. It handles downloading and serving compatible models behind a simple interface, acting as a bridge that brings powerful AI capabilities to everyday users. Here's a quick summary of Ollama's features:

- Local Data Control: Users can run models directly on their machines, keeping sensitive data within local networks. This reduces exposure to the data breaches that can occur when data is processed in the cloud.

- Cost Efficiency: By avoiding ongoing cloud fees, businesses can save money while gaining control over their model usage. Ollama itself is open source and free to run, so the main cost is the hardware it runs on.

- Multimodal Support: Ollama supports processing both text and image data, aiding various applications in content creation, coding, and even basic image recognition tasks without needing cloud resources.

Figure 2: Users can seamlessly access various models through Ollama's interface.

Key Features of Ollama

Here's a more in-depth look at the key features that set Ollama apart:

- Effortless Installation: Users can install Ollama quickly and start running LLMs on macOS, Linux, or Windows, often with a single command.

- Model Library: Ollama includes a growing library of pre-trained models, offering the ability to easily switch between models as required.

- Customization Options: Users can create tailored responses through adjustable settings such as temperature and system messages, allowing them to tweak performance based on the context and goals.

- Insightful Metrics: Ollama reports per-request statistics, such as token counts and generation time, offering data on how each model performs on your hardware.
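As a rough illustration of the customization options above, the following sketch builds a request for Ollama's local REST API with a system message and a temperature setting. It assumes Ollama is running on its default port (11434) and that a model tagged `llama3.1` has already been pulled. The helper names `build_payload` and `generate` are hypothetical, chosen for this example only.

```python
import json
import urllib.request

# Default address of a locally running Ollama server (assumed here).
OLLAMA_URL = "http://localhost:11434/api/generate"

def build_payload(model, prompt, system=None, temperature=None):
    """Assemble the JSON body for a single, non-streaming generation request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    if system is not None:
        payload["system"] = system  # overrides the model's default system message
    if temperature is not None:
        payload["options"] = {"temperature": temperature}
    return payload

def generate(model, prompt, system=None, temperature=None, url=OLLAMA_URL):
    """POST the request to the local server and return the generated text."""
    body = json.dumps(build_payload(model, prompt, system, temperature)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

With the server running, a call such as `generate("llama3.1", "Suggest a name for a bakery.", system="Answer in one sentence.", temperature=0.2)` would return the model's reply as a string. A lower temperature tends to give more predictable answers, and a higher one more varied answers.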

Use Cases for Ollama

Ollama can act as an efficient tool in multiple verticals:

- Small Business Applications: Local businesses can utilize Ollama to manage customer interactions, engage users with local events, and generate informative content.

- Academic Research: Students and researchers can use Ollama to run models that assist in research queries, giving them a powerful assistant right on their laptops.

- Creative Writing: Writers can use Ollama to overcome writer’s block, generate story ideas, or even co-write content, enhancing their personal workflow.

- Development and Code Generation: Developers can leverage Ollama to assist in programming tasks by generating boilerplate code snippets in various programming languages.

Comparative Analysis: DBRx vs. Ollama

While both DBRx and Ollama push the envelope in AI, they cater to different audiences with unique goals and capabilities. Here’s a breakdown of their key differences:

| Feature | DBRx | Ollama |
|---|---|---|
| Target Users | Enterprises looking for bespoke solutions | Individuals and small businesses |
| Deployment | Cloud-based (Databricks) | Local on user machines |
| Customization | Highly customizable with proprietary data | User-friendly customization options |
| Performance | State-of-the-art efficiency using MoE | Efficient but can vary based on hardware |
| Cost | Generally subscription-based | Flexible pricing, with a free option |
| Models Available | Custom foundation models built by enterprises | Pre-trained models available for public use |
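Because local performance varies with hardware, it can help to measure throughput directly and compare it with a hosted figure such as the 150 tokens per second quoted for DBRx. The sketch below assumes the `eval_count` (generated tokens) and `eval_duration` (nanoseconds) fields that Ollama includes in its API responses. The function names and the sample numbers are hypothetical.

```python
def tokens_per_second(eval_count, eval_duration_ns):
    """Convert a token count and a duration in nanoseconds into tokens/second."""
    if eval_duration_ns <= 0:
        raise ValueError("eval_duration_ns must be positive")
    return eval_count / (eval_duration_ns / 1e9)

def seconds_to_generate(num_tokens, rate):
    """Estimate how long a response of num_tokens takes at a given rate."""
    return num_tokens / rate

# Hypothetical sample: 300 tokens generated in 2 seconds.
rate = tokens_per_second(eval_count=300, eval_duration_ns=2_000_000_000)
print(f"{rate:.1f} tokens/s")
print(f"{seconds_to_generate(600, rate):.1f} s for a 600-token reply")
```

Running the same prompt against a few models on the same machine gives a quick sense of which ones are practical for interactive use.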

Why Use Arsturn?

Both DBRx and Ollama are game changers in the world of AI, giving users powerful tools to boost productivity, efficiency, and insight. For businesses aiming to build on these tools even further, there is another option: Arsturn. Arsturn lets you create custom chatbots using ChatGPT, enhancing engagement and conversions across digital channels.

Why Arsturn?

- No Coding Required: Even if you’re not tech-savvy, you can design chatbots tailored to your brand’s voice in only a few clicks!

- Full Customization: Fine-tune the conversational AI to meet your specific needs; the approach is much more adaptable compared to generic systems.

- Insightful Analytics: Track how users are interacting with your chatbot, providing valuable data that can help optimize your marketing strategies.

Arsturn is perfect for influencers, local businesses, and anyone looking to enhance their audience engagement through conversational AI. Just visit Arsturn.com today and unlock these capabilities for your brand!

Conclusion

In this ever-evolving tech landscape, both Databricks’ DBRx and Ollama are carving out prominent roles. They provide new tools for enterprises and individuals alike to tap into the power of AI. As organizations continue to embrace open-source models and locally run solutions, the future looks promising. Whether you're a large enterprise looking for the next big AI foundation layer or an individual excited to experiment with LLMs, there's a place for you in this vibrant ecosystem. So gear up, start experimenting, and unleash the potential of AI tools like DBRx, Ollama, and Arsturn today!